A Field Test of Software Transactional Memory Using the RSqueak Smalltalk VM

Extending the Smalltalk RSqueakVM with STM

by Conrad Calmez, Hubert Hesse, Patrick Rein and Malte Swart supervised by Tim Felgentreff and Tobias Pape

Introduction

After pypy-stm, we can announce that a second VM implementation supports software transactional memory: the RSqueakVM (which used to be called SPyVM). RSqueakVM is a Smalltalk implementation based on the RPython toolchain. We have added STM support based on the STM tools from RPython (rstm). The benchmarks indicate that linear scale-up is possible; however, in some situations the STM overhead limits the speedup.

The work was done as a master's project at the Software Architecture Group of Professor Robert Hirschfeld at the Hasso Plattner Institut at the University of Potsdam. We, four students, worked about one and a half days per week for four months on the topic. The RSqueakVM was originally developed during a sprint at the University of Bern. When we started the project we were new to the topic of building VMs / interpreters.

We would like to thank Armin, Remi and the #pypy IRC channel, who supported us over the course of our project. We would also like to thank Toni Mattis and Eric Seckler, who provided us with an initial code base.

Introduction to RSqueakVM

Like the original Smalltalk implementation, the RSqueakVM executes a given Squeak Smalltalk image, containing the Smalltalk code and a snapshot of formerly created objects and active execution contexts. These execution contexts are scheduled inside the image (greenlets) and not mapped to OS threads. Therefore the non-STM RSqueakVM runs on only one OS thread.

Changes to RSqueakVM

The core adjustments to support STM were made inside the VM and are transparent to a Smalltalk user. Additionally, we added Smalltalk code to influence the behavior of the STM. As the RSqueakVM has run in one OS thread so far, we added the capability to start OS threads. Essentially, we added an additional way to launch a new Smalltalk execution context (thread). In contrast to the original one, this one creates a new native OS thread, not a Smalltalk-internal green thread.

STM (with automatic transaction boundaries) already solves the problem of concurrent access to one value, as this is protected by the STM transactions (to be more precise, one instruction). But there are cases where the application relies on the fact that a bigger group of changes is executed either completely or not at all (atomically). Without further information, transaction borders could be in the middle of such a set of atomic statements. rstm allows aggregating multiple statements into one higher-level transaction. To let the application mark the beginning and the end of these atomic blocks (high-level transactions), we added two more STM-specific extensions to Smalltalk.
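To illustrate why such atomic blocks matter, here is a minimal sketch in Python. It is not the Smalltalk interface of RSqueak, which this post does not show. A `threading.Lock` stands in for an atomic block, and the names `Account`, `transfer` and `run_transfers` are hypothetical, introduced only for this example.

```python
import threading

# Stand-in for an atomic block: one global lock. This is an assumption made
# for illustration; an STM would run the block as a single transaction.
atomic = threading.Lock()


class Account:
    def __init__(self, balance):
        self.balance = balance


def transfer(source, target, amount):
    # Both updates belong together. Without the block, another thread could
    # observe the amount withdrawn from source but not yet added to target.
    with atomic:
        source.balance -= amount
        target.balance += amount


def run_transfers(first, second, count):
    # Move one unit back and forth; the sum of both balances never changes.
    for _ in range(count):
        transfer(first, second, 1)
        transfer(second, first, 1)


a, b = Account(100), Account(100)
threads = [threading.Thread(target=run_transfers, args=(a, b, 1000))
           for _ in range(4)]
for t in threads:
    t.start()
for t in threads:
    t.join()

total = a.balance + b.balance
print(total)
```

The invariant here is that the two balances always add up to the same total. A transaction border between the two assignments in `transfer` would let other threads see a state in which that invariant is broken.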

Benchmarks

RSqueak was executed in a single OS thread so far. rstm enables us to execute the VM using several OS threads. Using OS threads we expected a speed-up in benchmarks which use multiple threads. We measured this speed-up by using two benchmarks: a simple parallel summation where each thread sums up a predefined interval and an implementation of Mandelbrot where each thread computes a range of predefined lines.
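The parallel summation can be sketched as follows. This is a Python illustration of the idea, not the Smalltalk benchmark code we ran; the function name `parallel_sum` and the way the interval is split are assumptions.

```python
import threading


def parallel_sum(n, thread_count):
    """Sum the integers 0..n-1 using thread_count threads, one interval each."""
    partial = [0] * thread_count
    step = n // thread_count

    def worker(index, start, stop):
        subtotal = 0
        for value in range(start, stop):
            subtotal += value
        # Each thread writes only its own slot, so threads do not conflict.
        partial[index] = subtotal

    threads = []
    for index in range(thread_count):
        start = index * step
        # The last thread also takes the remainder of the division.
        stop = n if index == thread_count - 1 else start + step
        thread = threading.Thread(target=worker, args=(index, start, stop))
        threads.append(thread)
        thread.start()
    for thread in threads:
        thread.join()
    return sum(partial)


result = parallel_sum(1000000, 4)
print(result)
```

On an interpreter with a global lock, such as CPython, this sketch gives no speed-up. It only shows how the work is partitioned so that threads share no mutable state until the final sum.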

To assess the speed-up, we used one RSqueakVM compiled with rstm enabled, once running the benchmarks with OS threads and once with Smalltalk green threads. The workload always remained the same and only the number of threads increased. To assess the overhead imposed by the STM transformation, we also ran the green threads version on an unmodified RSqueakVM. All VMs were translated with the JIT optimization and all benchmarks were run once before the measurement to warm up the JIT. As the JIT optimization works, it is likely to be adopted by VM creators (the baseline RSqueakVM did that), so results with this optimization are more relevant in practice than those without it. We measured the execution time by getting the system time in Squeak. The results are:

Parallel Sum Ten Million

Benchmark: Parallel Sum 10,000,000

| Thread Count | RSqueak green threads | RSqueak/STM green threads | RSqueak/STM OS threads | Slow down from RSqueak green threads to RSqueak/STM green threads | Speed up from RSqueak/STM green threads to RSqueak/STM OS threads |
|---|---|---|---|---|---|
| 1 | 168.0 ms | 240.0 ms | 290.9 ms | 0.70 | 0.83 |
| 2 | 167.0 ms | 244.0 ms | 246.1 ms | 0.68 | 0.99 |
| 4 | 167.8 ms | 240.7 ms | 366.7 ms | 0.70 | 0.66 |
| 8 | 168.1 ms | 241.1 ms | 757.0 ms | 0.70 | 0.32 |
| 16 | 168.5 ms | 244.5 ms | 1460.0 ms | 0.69 | 0.17 |

Parallel Sum One Billion

Benchmark: Parallel Sum 1,000,000,000

| Thread Count | RSqueak green threads | RSqueak/STM green threads | RSqueak/STM OS threads | Slow down from RSqueak green threads to RSqueak/STM green threads | Speed up from RSqueak/STM green threads to RSqueak/STM OS threads |
|---|---|---|---|---|---|
| 1 | 16831.0 ms | 24111.0 ms | 23346.0 ms | 0.70 | 1.03 |
| 2 | 17059.9 ms | 24229.4 ms | 16102.1 ms | 0.70 | 1.50 |
| 4 | 16959.9 ms | 24365.6 ms | 12099.5 ms | 0.70 | 2.01 |
| 8 | 16758.4 ms | 24228.1 ms | 14076.9 ms | 0.69 | 1.72 |
| 16 | 16748.7 ms | 24266.6 ms | 55502.9 ms | 0.69 | 0.44 |

Mandelbrot Iterative

Benchmark: Mandelbrot

| Thread Count | RSqueak green threads | RSqueak/STM green threads | RSqueak/STM OS threads | Slow down from RSqueak green threads to RSqueak/STM green threads | Speed up from RSqueak/STM green threads to RSqueak/STM OS threads |
|---|---|---|---|---|---|
| 1 | 724.0 ms | 983.0 ms | 1565.5 ms | 0.74 | 0.63 |
| 2 | 780.5 ms | 973.5 ms | 5555.0 ms | 0.80 | 0.18 |
| 4 | 781.0 ms | 982.5 ms | 20107.5 ms | 0.79 | 0.05 |
| 8 | 779.5 ms | 980.0 ms | 113067.0 ms | 0.80 | 0.01 |
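The two ratio columns in the tables above are quotients of execution times. The following Python sketch reproduces them for one row; the helper name `ratio` is an assumption, and the input values are taken from the 4-thread row of the Parallel Sum 1,000,000,000 table.

```python
def ratio(baseline_ms, measured_ms):
    """Return baseline/measured, rounded to two decimals as in the tables."""
    return round(baseline_ms / measured_ms, 2)


# Row "4 threads" of the Parallel Sum 1,000,000,000 benchmark.
green = 16959.9       # RSqueak green threads
stm_green = 24365.6   # RSqueak/STM green threads
stm_os = 12099.5      # RSqueak/STM OS threads

slow_down = ratio(green, stm_green)  # below 1: the STM build is slower
speed_up = ratio(stm_green, stm_os)  # above 1: OS threads are faster

print(slow_down, speed_up)
```

In both columns a value below 1 means the second configuration took longer than the first, and a value above 1 means it was faster.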

Discussion of benchmark results

First of all, the ParallelSum benchmarks show that the parallelism is actually paying off, at least for sufficiently large embarrassingly parallel problems. Thus RSqueak can also benefit from rstm.

On the other hand, our Mandelbrot implementation shows the limits of our current rstm integration. We implemented two versions of the algorithm: one using one low-level array and one using two nested collections. In both versions, one job only calculates a distinct range of rows, and both lead to a slowdown. The summary of the state of rstm transactions shows that there are a lot of inevitable transactions (transactions which must be completed). One reason might be the interactions between the VM and its low-level extensions, so-called plugins. We have to investigate this further.
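The row-wise partitioning can be sketched as follows. This is a Python illustration of the nested-collection variant under our own assumptions, not the Smalltalk code we benchmarked; the names `mandelbrot_row`, `render` and `job` and the chosen image section are hypothetical.

```python
import threading


def mandelbrot_row(y, width, height, max_iter):
    """Return the iteration counts for one row of the image."""
    row = []
    for x in range(width):
        c = complex(-2.0 + 3.0 * x / width, -1.0 + 2.0 * y / height)
        z = 0j
        count = 0
        while abs(z) <= 2.0 and count < max_iter:
            z = z * z + c
            count += 1
        row.append(count)
    return row


def render(width, height, thread_count, max_iter=50):
    """Compute the image with each job handling a distinct range of rows."""
    image = [None] * height  # one slot per row, written by exactly one job

    def job(first_row, last_row):
        for y in range(first_row, last_row):
            image[y] = mandelbrot_row(y, width, height, max_iter)

    rows_per_job = (height + thread_count - 1) // thread_count
    threads = []
    for first_row in range(0, height, rows_per_job):
        last_row = min(first_row + rows_per_job, height)
        threads.append(threading.Thread(target=job, args=(first_row, last_row)))
    for t in threads:
        t.start()
    for t in threads:
        t.join()
    return image


image = render(30, 20, 4)
```

Since no two jobs write to the same row, the jobs should not conflict on the result data itself, which is why the slowdown points to something else, such as the inevitable transactions mentioned above.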

Limitations

Although the current VM setup is working well enough to support our benchmarks, the VM still has limitations. First of all, as it is based on rstm, it has the current limitation of only running on 64-bit Linux.

Besides this, we also have two major limitations regarding the VM itself. First, the atomic interface exposed in Smalltalk currently does not work when the VM is compiled with the just-in-time compiler transformation. Simple examples such as the concurrent parallel sum work fine, while more complex benchmarks such as chameneos fail. The reasons for this are currently beyond our understanding. Second, Smalltalk supports green threads, which are managed by the VM and are not mapped to OS threads. We currently support starting new Smalltalk threads as OS threads instead of starting them as green threads. However, existing threads in a Smalltalk image are not migrated to OS threads, but remain running as green threads.

Future work for STM in RSqueak

The work we presented revealed interesting problems. We propose the following problem statements for further analysis:

- Inevitable transactions in benchmarks: This looks like it could limit other applications too, so it should be solved.

- Collection implementation aware of STM: The current implementation of collections can cause a lot of STM collisions due to their internal memory structure. We believe there is potential for performance improvements if we replace these collections in an STM-enabled interpreter with implementations that cause fewer STM collisions. As already proposed by Remi Meier, bags, sets and lists are of particular interest.

- Finally, we exposed STM through language features such as the atomic method, which is provided through the VM. Originally, it was possible to model STM transaction barriers implicitly by using clever locks; now it's exposed via the atomic keyword. From a language design point of view, the question arises whether this is a good solution and what features an STM-enabled interpreter must provide to the user in general. Of particular interest are, for example, access to the transaction length and hints for transaction borders, and their performance impact.

Details for the technically inclined

- Adjustments to the interpreter loop were minimal.

- STM works at bytecode granularity, which means there is an implicit transaction border after every executed bytecode. Possible alternatives: only break transactions after certain bytecodes, or break transactions one abstraction layer above, e.g. at object methods (setter, getter).

- rstm calls were exposed using primitives (a way to expose native code in Smalltalk); this was mainly used for atomic.

- Starting and stopping OS threads is exposed via primitives as well. Threads are started from within the interpreter.

- For STM-enabled Smalltalk code we currently have different image versions. However, another way to add, load and replace code in the Smalltalk code base is required to make switching between STM and non-STM code simple.
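The difference between a transaction border after every bytecode and a border after selected bytecodes only can be sketched with a toy model. This Python code is not the RSqueakVM interpreter loop; the bytecode names and the function `run` are hypothetical, and "executing" a bytecode is reduced to recording it.

```python
def run(bytecodes, is_border):
    """Group executed bytecodes into transactions.

    is_border(op) decides whether a transaction border follows op.
    Returns the list of transactions, each a list of bytecodes.
    """
    transactions = []
    current = []
    for op in bytecodes:
        current.append(op)  # stands in for executing the bytecode
        if is_border(op):
            transactions.append(current)  # stands in for a commit
            current = []
    if current:
        transactions.append(current)
    return transactions


program = ["push", "push", "add", "store", "push", "send", "return"]

# Behaviour described above: an implicit border after every bytecode.
per_bytecode = run(program, lambda op: True)

# Alternative: break only after selected bytecodes (a hypothetical choice).
coarse = run(program, lambda op: op in ("store", "send", "return"))

print(len(per_bytecode), len(coarse))
```

Fewer, longer transactions mean fewer commits, but also a larger window in which two threads can conflict, so the best granularity is an open question.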

Details on the project setup

From a non-technical perspective, a problem we encountered was the huge roundtrip times (on our machines up to 600s, 900s with JIT enabled). This led to a tendency towards bigger code changes ("Before we compile, let's also add this"), lost flow ("What were we doing before?") and different compiled interpreters in parallel testing ("How is this version different from the others?"). As a consequence it was harder to test and correct errors. While this is not as much of a problem for other RPython VMs, RSqueakVM needs to execute the entire image, which makes running it untranslated even slower.

Summary

The benchmarks show that speed-up is possible, but also that the STM overhead in some situations can eat up the speedup. The resulting STM-enabled VM still has some limitations: as rstm currently runs only on 64-bit Linux, so does the RSqueakVM. Even though it is now possible for us to create new threads that map to OS threads within the VM, the migration of existing Smalltalk threads remains problematic.

We showed that an existing VM code base can benefit from STM in terms of scaling up. Further, it was relatively easy to enable STM support. This may also be valuable to VM developers considering adding STM support to their VMs.

PyPy-STM: first "interesting" release

Hi all,

PyPy-STM is now reaching a point where we can say it's good enough to be a GIL-less Python. (We don't guarantee there are no more bugs, so please report them :-) The first official STM release:

- pypy-stm-2.3-r2-linux64

(UPDATE: this is release r2, fixing a systematic segfault at start-up on some systems)

This corresponds roughly to PyPy 2.3 (not 2.3.1). It requires 64-bit Linux. More precisely, this release is built for Ubuntu 12.04 to 14.04; you can also rebuild it from source by getting the branch stmgc-c7. You need clang to compile, and you need a patched version of llvm.

This version's performance can reasonably be compared with a regular PyPy, where both include the JIT. Thanks for following the meandering progress of PyPy-STM over the past three years --- we're finally getting somewhere really interesting! We cannot thank enough all contributors to the previous PyPy-STM money pot that made this possible. And, although this blog post is focused on the results from that period of time, I have of course to remind you that we're running a second call for donation for future work, which I will briefly mention again later.

A recap of what we did to get there: around the start of the year we found a new model, a "redo-log"-based STM which uses a couple of hardware tricks to not require chasing pointers, giving it (in this context) exceptionally cheap read barriers. This idea was developed over the following months and (relatively) easily integrated with the JIT compiler. The most recent improvements on the Garbage Collection side are closing the gap with a regular PyPy (there is still a bit more to do there). There is some preliminary user documentation.

Today, the result of this is a PyPy-STM that is capable of running pure Python code on multiple threads in parallel, as we will show in the benchmarks that follow. A quick warning: this is only about pure Python code. We didn't try so far to optimize the case where most of the time is spent in external libraries, or even manipulating "raw" memory like array.array or numpy arrays. To some extent there is no point because the approach of CPython works well for this case, i.e. releasing the GIL around the long-running operations in C. Of course it would be nice if such cases worked as well in PyPy-STM --- which they do to some extent; but checking and optimizing that is future work.

As a starting point for our benchmarks, when running code that only uses one thread, we get a slow-down between 1.2 and 3: at worst, three times as slow; at best only 20% slower than a regular PyPy. This worst case has been brought down --it used to be 10x-- by recent work on "card marking", a useful GC technique that is also present in the regular PyPy (and about which I don't find any blog post; maybe we should write one :-) The main remaining issue is fork(), or any function that creates subprocesses: it works, but is very slow. To remind you of this fact, it prints a line to stderr when used.

Now the real main part: when you run multithreaded code, it scales very nicely with two threads, and less-than-linearly but still not badly with three or four threads. Here is an artificial example:

total = 0
lst1 = ["foo"]
for i in range(100000000):
    lst1.append(i)
    total += lst1.pop()

We run this code N times, once in each of N threads (full benchmark). Run times, best of three:

| Number of threads | Regular PyPy (head) | PyPy-STM |
|---|---|---|
| N = 1 | real 0.92s, user+sys 0.92s | real 1.34s, user+sys 1.34s |
| N = 2 | real 1.77s, user+sys 1.74s | real 1.39s, user+sys 2.47s |
| N = 3 | real 2.57s, user+sys 2.56s | real 1.58s, user+sys 4.106s |
| N = 4 | real 3.38s, user+sys 3.38s | real 1.64s, user+sys 5.35s |

(The "real" time is the wall clock time. The "user+sys" time is the recorded CPU time, which can be larger than the wall clock time if multiple CPUs run in parallel. This was run on a 4x2 cores machine. For direct comparison, avoid loops that are so trivial that the JIT can remove all allocations from them: right now PyPy-STM does not handle this case well. It has to force a dummy allocation in such loops, which makes minor collections occur much more frequently.)
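A harness for this kind of measurement could look like the sketch below. It is not the linked full benchmark; the function names `work` and `run_in_threads` are assumptions, and the iteration count is reduced so that the sketch finishes quickly on any interpreter.

```python
import threading
import time


def work(iterations):
    # Same loop body as the example above, with a configurable length.
    total = 0
    lst1 = ["foo"]
    for i in range(iterations):
        lst1.append(i)
        total += lst1.pop()
    return total


def run_in_threads(n, iterations):
    """Run work() once in each of n threads and return the wall-clock time."""
    threads = [threading.Thread(target=work, args=(iterations,))
               for _ in range(n)]
    start = time.time()
    for t in threads:
        t.start()
    for t in threads:
        t.join()
    return time.time() - start


timings = {n: run_in_threads(n, 100000) for n in (1, 2, 3, 4)}
for n, seconds in sorted(timings.items()):
    print("N = %d: real %.2fs" % (n, seconds))
```

This only reports the "real" time. The "user+sys" figures in the table come from the process CPU time, which this sketch does not record.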

Four threads is the limit so far: only four threads can be executed in parallel. Similarly, the memory usage is limited to 2.5 GB of GC objects. These two limitations are not hard to increase, but at least increasing the memory limit requires fighting against more LLVM bugs. (Include here snark remarks about LLVM.)

Here are some measurements from more real-world benchmarks. This time, the amount of work is fixed and we parallelize it on T threads. The first benchmark is just running translate.py on a trunk PyPy. The last three benchmarks are here.

| Benchmark | PyPy 2.3 | (PyPy head) | PyPy-STM, T=1 | T=2 | T=3 | T=4 |
|---|---|---|---|---|---|---|
| translate.py --no-allworkingmodules (annotation step) | 184s | (170s) | 386s (2.10x) | n/a | | |
| multithread-richards 5000 iterations | 24.2s | (16.8s) | 52.5s (2.17x) | 37.4s (1.55x) | 25.9s (1.07x) | 32.7s (1.35x) |
| mandelbrot divided in 16-18 bands | 22.9s | (18.2s) | 27.5s (1.20x) | 14.4s (0.63x) | 10.3s (0.45x) | 8.71s (0.38x) |
| btree | 2.26s | (2.00s) | 2.01s (0.89x) | 2.22s (0.98x) | 2.14s (0.95x) | 2.42s (1.07x) |

This shows various cases that can occur:

- The mandelbrot example runs with minimal overhead and very good parallelization. It's dividing the plane to compute in bands, and each of the T threads receives the same number of bands.

- Richards, a classical benchmark for PyPy (tweaked to run the iterations in multiple threads), is hard to beat on regular PyPy: we suspect that the difference is due to the fact that a lot of paths through the loops don't allocate, triggering the issue already explained above. Moreover, the speed of Richards was again improved dramatically recently, in trunk.

- The translation benchmark measures the time translate.py takes to run the first phase only, "annotation" (for now it consumes too much memory to run translate.py to the end). Moreover the timing starts only after the large number of subprocesses spawned at the beginning (mostly gcc). This benchmark is not parallel, but we include it for reference here. The slow-down factor of 2.1x is still too much, but we have some idea about the reasons: most likely, again the Garbage Collector, missing the regular PyPy's very fast small-object allocator for old objects. Also, translate.py is an example of an application that could, with reasonable effort, be made largely parallel in the future using atomic blocks.

- Atomic blocks are also present in the btree benchmark. I'm not completely sure but it seems that, in this case, the atomic blocks create too many conflicts between the threads for actual parallelization: the base time is very good, but running more threads does not help at all.
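For readers who have not seen atomic blocks, here is a small sketch of the pattern. It assumes that PyPy-STM exposes the context manager as `__pypy__.thread.atomic`, and it falls back to an ordinary re-entrant lock on other interpreters so that it runs anywhere; the word-counting workload and the name `record` are made up for illustration and are not the btree benchmark.

```python
import threading

try:
    # Assumed to be available on PyPy-STM only.
    import __pypy__
    atomic = __pypy__.thread.atomic
except (ImportError, AttributeError):
    # Fallback for other interpreters: a plain lock gives the same
    # all-or-nothing behaviour for this sketch, without any parallelism.
    atomic = threading.RLock()

counts = {}


def record(words):
    for word in words:
        # The read and the write of the shared dictionary belong together.
        with atomic:
            counts[word] = counts.get(word, 0) + 1


words = ["a", "b", "a", "c"] * 250
threads = [threading.Thread(target=record, args=(words,)) for _ in range(4)]
for t in threads:
    t.start()
for t in threads:
    t.join()

print(sorted(counts.items()))
```

Every thread updates the same dictionary here, so the blocks are correct but conflict all the time. That is the kind of situation in which running more threads would not be expected to help.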

As a summary, PyPy-STM looks already useful to run CPU-bound multithreaded applications. We are certainly still going to fight slow-downs, but it seems that there are cases where 2 threads are enough to outperform a regular PyPy, by a large margin. Please try it out on your own small examples!

And, at the same time, please don't attempt to retrofit threads inside an existing large program just to benefit from PyPy-STM! Our goal is not to send everyone down the obscure route of multithreaded programming and its dark traps. We are finally going to shift our main focus to phase 2 of our research (donations welcome): how to enable a better way of writing multi-core programs. The starting point is to fix and test atomic blocks. Then we will have to debug common causes of conflicts and fix them or work around them; and try to see how common frameworks like Twisted can be adapted.

Lots of work ahead, but lots of work behind too :-)

Armin (thanks Remi as well for the work).

You're just extracting and running the "bin/pypy"? It works for me on a very close configuration, Ubuntu 14.04 too...

Yes. Sorry, it doesn't make sense to me. You need to debug with gdb, probably with an executable that has got the debugging symbols. You need to either build it yourself, or recompile the pregenerated sources from: https://cobra.cs.uni-duesseldorf.de/~buildmaster/misc/pypy-c-r72356-stm-jit-SOURCE.txz

If I try virtualenv I get:

virtualenv stmtest -p Projekt/pypy-stm-2.3-linux64/bin/pypy

Running virtualenv with interpreter Projekt/pypy-stm-2.3-linux64/bin/pypy

[forking: for now, this operation can take some time]

[forking: for now, this operation can take some time]

New pypy executable in stmtest/bin/pypy

[forking: for now, this operation can take some time]

ERROR: The executable stmtest/bin/pypy is not functioning

ERROR: It thinks sys.prefix is u'/home/ernst' (should be u'/home/ernst/stmtest')

ERROR: virtualenv is not compatible with this system or executable

@Ernst: sorry, it works fine for me as well. I tried the pypy-stm provided here, both on a Ubuntu 12.04 and a Ubuntu 14.04 machine. Maybe you have a too old virtualenv? Does it work with regular PyPy?

Thanks to the author of the now-deleted comments, we could track and fix a bug that only shows up on some Linux systems. If pypy-stm systematically segfaults at start-up for you too, try the "2.3-r2" release (see update in the post itself).

This is exciting! One minor bug in the actual post: you can describe slowdown / speedup in two different ways, with total time as a percentage of original time, or with time difference as a percentage of original time. You mention a 20% slowdown (clearly using the latter standard) and then a 300% slowdown, which you describe as 3x (suggesting that you use the former standard). To be consistent , you should either describe them as 120% and 300%, respectively (using the former standard), or 20% and 200%, respectively (using the latter standard).

Thanks!

Hi again,

just to play around a little I've put together https://github.com/Tinche/stm-playground for myself.

I picked a generic CPU-bound problem (primality testing) and tried comparing multithreaded implementations in CPython 2.7, ordinary PyPy and PyPy-STM.

I figured this would be easily parallelizable (low conflicts) but it doesn't seem to be the case - I don't get all my cores pegged using the STM.

bench-threadpool.py, on my machine, gives about the same time for CPython and PyPy-STM, while ordinary PyPy totally smokes them both (even with the GIL :), one order of magnitude difference (20 sec vs 2 sec).

bench-threadpool-naive will crash the STM interpreter on my system. :)

Getting away from threads, CPython will actually beat PyPy in a multi-process scenario by a factor of 2, which I found surprising. CPython does indeed use up all my cores 100% while dealing with a process pool, while PyPy won't even come close.

For the same workload, PyPy is actually faster running multithreaded with the GIL than multi-process, and fastest running with only 1 thread (expected, with the GIL only being overhead in this scenario).

This is good news. For many of my applications, an important feature in the next phase will be the optimization for [..] the built-in dictionary type, for which we would like accesses and writes using independent keys to be truly independent [..]. My applications are mostly server applications (Twisted-based and others) that store state information on sessions/transactions in a small number of dictionaries that can have hundreds or thousands of entries concurrently, and would be accessed constantly.

I'm glad I donated and plan to do so again in the future :-)

@Tin: I would tweak bench-queue.py to avoid a million inter-thread communications via the queue. For example, run 1000 check_primes instead of just 1 for every number received from the queue.

@Tin: ...no, I tried too and it doesn't seem to help. We'll need to look into this in more detail....

@Armin I've pushed a version of bench-queue with a tweakable batch size and concurrency level. Doing the work in batches of, say, 1000 does indeed make it go faster with all implementations.

I've noticed pypy-stm runs have a large variance. It's not like I'm doing scientific measurements here, but for the queue test I'm getting runtimes from ~15 sec to ~27 sec, whereas for example ordinary PyPy is in the range 4.6 sec - 4.9 sec, and CPython ~22.5 - ~24.7, again, relatively close. Again, this is just something I noticed along the way and not the result of serious benchmarking in isolation.

Ooooof. Ok, I found out what is wrong in bench-queue. The issue is pretty technical, but basically if you add "with __pypy__.thread.atomic:" in the main top-level loop in worker(), then it gets vastly faster. On my machine it beats the real-time speed of a regular pypy. See https://bpaste.net/show/450553/

It clearly needs to be fixed...

Added an answer to the question "what about PyPy3?": https://pypy.readthedocs.org/en/latest/stm.html#python-3

@Armin, cool! I've found that the thread pool version can be sped up ~2-3x by wrapping the contents of check_prime with 'atomic' too.

One more observation: with the atomic context manager, on PyPy-STM the queue implementation will beat the thread pool implementation (slightly), which isn't the case for CPython or ordinary PyPy.

If you guys did a facelift on the website like the one for your HippyVM, I believe the project would gain a lot of momentum. It is unfortunate but true that most company managers would visit it and think it is not industrial quality if an employee comes saying that they should sponsor developing something in PyPy.

r2 still doesn't work for me (ubuntu 14.04, intel Core2 CPU T7400)

bash: ./pypy: cannot execute binary file: Exec format error

this is a question for the guys developing PyPy... i am completely new to Python so please bear with me.

here is what i don't understand: it seems to me that you are reinventing the wheel because doesn't the Oracle or Azul Systems JVM already provide a super performant GC and JIT? even STM is becoming available. and since Jython can run on the JVM, why do PyPy at all?

wouldn't a JVM compliant implementation of Python be more performant than PyPy or CPython?

or am i missing something here?

any pointers greatly appreciated. thanks.

Having a JIT in the JVM is very different from having a JIT that can understand Python. For proof, the best (and only) implementation of Python on the JVM, Jython, is running at around CPython speed (generally a bit slower). I suspect that STM is similarly not designed for the purposes to which Jython would put it and would thus perform poorly. The only part that would probably work out of the box would be the GC. A more subtle argument against starting from the JVM is that of semantic mismatch. See for example https://www.stups.uni-duesseldorf.de/mediawiki/images/5/51/Pypy.pdf

PyPy3 2.3.1 - Fulcrum

We're pleased to announce the first stable release of PyPy3. PyPy3 targets Python 3 (3.2.5) compatibility.

We would like to thank all of the people who donated to the py3k proposal for supporting the work that went into this.

You can download the PyPy3 2.3.1 release here:

https://pypy.org/download.html#pypy3-2-3-1

Highlights

- The first stable release of PyPy3: support for Python 3!

- The stdlib has been updated to Python 3.2.5

- Additional support for the u'unicode' syntax (PEP 414) from Python 3.3

- Updates from the default branch, such as incremental GC and various JIT improvements

- Resolved some notable JIT performance regressions from PyPy2:

  - Re-enabled the previously disabled collection (list/dict/set) strategies

  - Resolved performance of iteration over range objects

  - Resolved handling of Python 3's exception __context__ unnecessarily forcing frame object overhead

What is PyPy?

PyPy is a very compliant Python interpreter, almost a drop-in replacement for CPython 2.7.6 or 3.2.5. It's fast due to its integrated tracing JIT compiler.

This release supports x86 machines running Linux 32/64, Mac OS X 64, Windows, and OpenBSD, as well as newer ARM hardware (ARMv6 or ARMv7, with VFPv3) running Linux.

While we support 32-bit Python on Windows, work on the native Windows 64-bit Python is still stalling; we would welcome a volunteer to handle that.

How to use PyPy?

We suggest using PyPy from a virtualenv. Once you have a virtualenv installed, you can follow instructions from pypy documentation on how to proceed. This document also covers other installation schemes.

Cheers,

the PyPy team

As far as I know, a majority of the benchmarks we use have never been ported to Python 3. So it's far more complicated than just push a switch.

Awesome, congrats on the new release! Finally some stable PyPy goodness for Python 3 as well :)

PyPy 2.3.1 - Terrestrial Arthropod Trap Revisited

This release contains several bugfixes and enhancements among the user-facing improvements:

- The built-in struct module was renamed to _struct, solving issues with IDLE and other modules

- Support for compilation with gcc-4.9

- A CFFI-based version of the gdbm module is now included in our binary bundle

- Many issues were resolved since the 2.3 release on May 8

You can download the PyPy 2.3.1 release here:

https://pypy.org/download.html

PyPy is a very compliant Python interpreter, almost a drop-in replacement for CPython 2.7. It's fast (pypy 2.3.1 and cpython 2.7.x performance comparison) due to its integrated tracing JIT compiler.

This release supports x86 machines running Linux 32/64, Mac OS X 64, Windows, and OpenBSD, as well as newer ARM hardware (ARMv6 or ARMv7, with VFPv3) running Linux.

We would like to thank our donors for the continued support of the PyPy project.

The complete release notice is here.

Please try it out and let us know what you think. We especially welcome success stories, please tell us about how it has helped you!

Cheers, The PyPy Team

PyPy 2.3 - Terrestrial Arthropod Trap

This release also contains several bugfixes and performance improvements, many generated by real users finding corner cases. CFFI has made it easier than ever to use existing C code with both CPython and PyPy, easing the transition for packages like cryptography, Pillow (Python Imaging Library [Fork]), a basic port of pygame-cffi, and others.

PyPy can now be embedded in a hosting application, for instance inside uWSGI.

You can download the PyPy 2.3 release here:

https://pypy.org/download.html

PyPy is a very compliant Python interpreter, almost a drop-in replacement for CPython 2.7. It's fast (pypy 2.3 and cpython 2.7.x performance comparison; note that cpython's speed has not changed since 2.7.2) due to its integrated tracing JIT compiler.

This release supports x86 machines running Linux 32/64, Mac OS X 64, Windows, and OpenBSD, as well as newer ARM hardware (ARMv6 or ARMv7, with VFPv3) running Linux.

We would like to thank our donors for the continued support of the PyPy project.

The complete release notice is here

Cheers, The PyPy Team

Hi Why don't you accept Bitcoin as one of donation methods? Bitcoin makes it easier to donate your project

I believe that you add it and announce it here, there will be several posts in Reddit and others sources that help you to collect funds

Hey,

Just wondering, does v2.3 contain the fix for issue 1683 titled "BytesIO leaks like hell"?

https://bugs.pypy.org/issue1683

The bug status is set to resolved so one would expect it to be fixed. Please reopen the bug report if you think differently.

There is no info about what exactly made CFFI easier in this release.

NumPy on PyPy - Status Update

Work on NumPy on PyPy continued in March, though at a lighter pace than the previous few months. Progress was made on both compatibility and speed fronts. Several behavioral issues reported to the bug tracker were resolved. The most significant of these was probably the correction of casting to built-in Python types. Previously, int/long conversions of numpy scalars such as inf/nan/1e100 would return bogus results. Now, they raise or return values, as appropriate.
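As a point of reference, this is how plain Python floats behave for the same three values. The sketch uses built-in floats only, so it runs without NumPy; that NumPy scalars on PyPy now behave the same way is our reading of the fix described above, not something this code checks. The helper name `to_int` is hypothetical.

```python
def to_int(value):
    """Convert a float to int, returning the exception name on failure."""
    try:
        return int(value)
    except (OverflowError, ValueError) as exc:
        return type(exc).__name__


results = {
    "inf": to_int(float("inf")),    # infinity cannot be an integer
    "nan": to_int(float("nan")),    # neither can not-a-number
    "1e100": to_int(1e100),         # large but finite: converts fine
}

for name in ("inf", "nan", "1e100"):
    print(name, results[name])
```

The first two conversions raise (`OverflowError` and `ValueError` respectively), while the third returns an arbitrary-precision integer.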

On the speed front, enhancements to the PyPy JIT were made to support virtualizing the raw_store/raw_load memory operations used in numpy arrays. Further work remains here in virtualizing the alloc_raw_storage when possible. This will allow scalars to have storages but still be virtualized when possible in loops.

Aside from continued work on compatibility/speed of existing code, we also hope to begin implementing the C-level components of other numpy modules such as mtrand, nditer, linalg, and so on. Several approaches could be taken to get C-level code in these modules working, ranging from reimplementing in RPython to interfacing with existing code with CFFI, if possible. The appropriate approach depends on many factors and will probably vary from module to module.

To try out PyPy + NumPy, grab a nightly PyPy and install our NumPy fork. Feel free to report comments/issues to IRC, our mailing list, or bug tracker. Thanks to the contributors to the NumPy on PyPy proposal for supporting this work.

Trying to install scipy on top gives me an error while compiling scipy/cluster/src/vq_module.c; isn't scipy yet supported?

scipy is not supported. Sometimes scipy functions are in fact in numpy in which case you can just copy the code. Otherwise you need to start learning cffi.

You mentioned storage and scalar types. Is it related to this bug

How far off is running Pandas on PyPy? Will it be just a recompile when NumPy is ported, or is it heavy work to port Pandas to PyPy after NumPy is done? Should I look for another solution rather than plan to run Pandas on PyPy?

Any news on the NumPy front? I check this blog for such stuff every week and also contributed to the funding drive.

I fully understand that developers skilled enough to work on such a project are hard to come by even with money, and NumPy support isn't probably the most technologically exciting aspect of PyPy.

Even just a few lines on the latest development or some milestones would show that the project is alive (although I fully understand that writing blog posts isn't everybody's favorite thing). A summary of what shape the developers think the code is in would help too. If you prefer coding to blogging, maybe implementing some kind of time-series graph for the numpypy-status page could be nice also (I keep checking it out but can never remember what the state was last time I checked). Maybe I can see if I can do a quick hack via e.g. archive.org for this.

I think it would also be a huge boost to have even a hacky temporary way to interface with Matplotlib and/or SciPy, as it's quite hard to do many practical analyses without these. I'd probably try to do my analyses in such an environment and perhaps even implement/fix at least things that are my own itches. There was the 2011 hack, but it doesn't seem to be elaborated anywhere. I could live with (or even prefer, so it definitely won't become the permanent version) an ugly, slow, memory-hungry and unstable hack that would spam the stderr with insulting messages. But without any way of interfacing the existing stuff it's just too much work for the more complicated analyses.

I'm trying to track the https://bitbucket.org/pypy/numpy branch but it's a bit hard to see the bigger picture just from the commits. Even just some tags and/or meta-issues could be helpful. I'm also a bit confused on where (repo-wise) the development is actually happening. There are some sort of fresh NumPy-branches in the numpy tree. The micronumpy-project is probably dead or merged into the pypy/numpy-branch?

PS. Please don't take this as too strong criticism. I prefer to just silently code away myself too. Just what would be nice to see as somebody eagerly waiting to use Pypy in numerical stuff.

STM results and Second Call for Donations

Hi all,

We now have a preliminary version of PyPy-STM with the JIT, from the new STM documentation page. This PyPy-STM is still not quite useful, failing to top the performance of a regular PyPy by a small margin on most benchmarks, but it's definitely getting there :-) The overheads with the JIT are still a bit too high. (I've been tracking an obscure bug for days. It turned out to be a simple buffer overflow. But if anybody has a clue about why a hardware watchpoint in gdb, set on one of the garbled memory locations, fails to trigger but the memory ends up being modified anyway... and, it turns out, by just a regular pointer write... ideas welcome.)

But I go off-topic :-) The main point of this post is to announce the 2nd Call for Donation about STM. We achieved most of the goals laid out in the first call. We even largely overachieved them in terms of raw performance, even if there are many cases that are unreasonably slow for now. So, after the successful research, we are launching a second proposal about the development part of the project:

- Polish PyPy-STM to get a consistently reasonable speed, 25%-40% slower than a regular JITted PyPy when running single-threaded code. Of course it is supposed to scale nicely as long as there are no user-visible conflicts.

- Focus on developing the Python-facing interface: both internal things (e.g. do dictionaries need to be more TM-friendly in general?) as well as directly visible things (e.g. some profiler-like interface to explore common conflicts in a program).

Regular multithreaded code should benefit out of the box, but the final goal is to explore and tweak some existing non-multithreaded frameworks and improve their TM-friendliness. So existing programs using Twisted or Stackless, for example, should run on multiple cores without any major change.

See the full call for more details! I'd like to thank Remi Meier for getting involved. And a big thank you to everybody who contributed money on the first call. It took more time than anticipated, but it's there in good but rough shape. Now it needs a lot of polishing :-)

Armin

It would be good to have a compiled STM version for something more recent than Ubuntu 12.04, e.g. 14.04, preferably with numpy included, to simplify numpy installation. Or maybe the version for 12.04 works with 14.04?

pygame_cffi: pygame on PyPy

The Raspberry Pi aims to be a low-cost educational tool that anyone can use to learn about electronics and programming. Python and pygame are included in the Pi's programming toolkit. And since last year, thanks in part to sponsorship from the Raspberry Pi Foundation, PyPy also works on the Pi (read more here).

With PyPy working on the Pi, game logic written in Python stands to gain an awesome performance boost. However, the original pygame is a Python C extension. This means it performs poorly on PyPy and negates any speedup in the Python parts of the game code.

One solution to making pygame games run faster on PyPy, and eventually on the Raspberry Pi, comes in the form of pygame_cffi. pygame_cffi uses CFFI to wrap the underlying SDL library instead of a C extension. A few months ago, the Raspberry Pi Foundation sponsored a Cape Town Python User Group hackathon to build a proof-of-concept pygame using CFFI. This hackathon was a success and it produced an early working version of pygame_cffi.

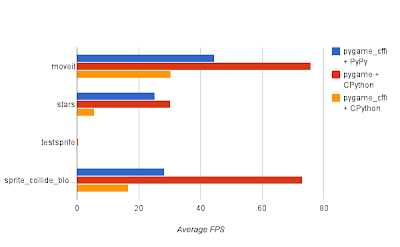

So for the last 5 weeks Raspberry Pi has been funding work on pygame_cffi. The goal was a complete implementation of the core modules. We also wanted benchmarks to illuminate performance differences between pygame_cffi on PyPy and pygame on CPython. We are happy to report that those goals were met. So without further ado, here's a rundown of what works.

Current functionality

- Surfaces support all the usual flags for SDL and OpenGL rendering (more about OpenGL below).

- The graphics-related modules color, display, font and image, and parts of draw and transform are mostly complete.

- Events! No fastevent module yet, though.

- Mouse and keyboard functionality, as provided by the mouse and key modules, is complete.

- Sound functionality, as provided by the mixer and music modules, is complete.

- Miscellaneous modules, cursors, rect, sprite and time are also complete.

With the above-mentioned functionality in place we could get 10+ of the pygame examples to work, and a number of PyWeek games. At the time of writing, if a game doesn't work it is most likely due to an unimplemented transform or draw function. That will be remedied soon.

Performance

In terms of performance, pygame_cffi on PyPy is showing a lot of promise. It beats pygame on CPython by a significant margin in our events processing and collision detection benchmarks, while blit and fill benchmarks perform similarly. The pygame examples we checked also perform better.
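As a rough illustration of why collision detection gains the most, here is a minimal sketch of a naive all-pairs (N²) rectangle check. It is not the actual benchmark code; `Rect`, `overlaps` and `colliding_pairs` are hypothetical names. The point is that the work is a tight pure-Python loop, which is the kind of code the PyPy JIT handles well.

```python
from collections import namedtuple

# A hypothetical axis-aligned rectangle: top-left corner plus width and height.
Rect = namedtuple("Rect", "x y w h")


def overlaps(a, b):
    """True if the two rectangles share any interior area."""
    return (a.x < b.x + b.w and b.x < a.x + a.w and
            a.y < b.y + b.h and b.y < a.y + a.h)


def colliding_pairs(rects):
    """Compare every rectangle with every later one: N * (N - 1) / 2 checks."""
    pairs = []
    for i in range(len(rects)):
        for j in range(i + 1, len(rects)):
            if overlaps(rects[i], rects[j]):
                pairs.append((i, j))
    return pairs


rects = [Rect(0, 0, 10, 10), Rect(5, 5, 10, 10), Rect(50, 50, 10, 10)]
print(colliding_pairs(rects))  # [(0, 1)]
```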

However, there is still work to be done to identify and eliminate bottlenecks. On the Raspberry Pi performance is markedly worse compared to pygame (barring collision detection). The PyWeek games we tested also performed slightly worse. Fortunately there is room for improvement in various places.

[Figures: Invention & Mutable Mamba (x86); standard pygame examples (Raspberry Pi)]

Here's a summary of some of the benchmarks. Relative speed refers to the frame rate obtained in pygame_cffi on PyPy relative to pygame on CPython.

| Benchmark | Relative speed (pypy speedup) |
|---|---|
| Events (x86) | 1.41 |
| Events (Pi) | 0.58 |
| N² collision detection on 100 sprites (x86) | 4.14 |
| N² collision detection on 100 sprites (Pi) | 1.01 |
| Blit 100 surfaces (x86) | 1.06 |
| Blit 100 surfaces (Pi) | 0.60 |
| Invention (x86) | 0.95 |
| Mutable Mamba (x86) | 0.72 |
| stars example (x86) | 1.95 |
| stars example (Pi) | 0.84 |
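To read the table at a glance, the following sketch splits the rows by whether the relative speed is above or below 1.0, i.e. whether pygame_cffi on PyPy reached a higher or lower frame rate than pygame on CPython. The numbers are copied from the table; the variable names are only for illustration.

```python
# Relative speed = frame rate of pygame_cffi on PyPy / frame rate of pygame on CPython.
relative_speed = {
    "Events (x86)": 1.41,
    "Events (Pi)": 0.58,
    "N2 collision detection on 100 sprites (x86)": 4.14,
    "N2 collision detection on 100 sprites (Pi)": 1.01,
    "Blit 100 surfaces (x86)": 1.06,
    "Blit 100 surfaces (Pi)": 0.60,
    "Invention (x86)": 0.95,
    "Mutable Mamba (x86)": 0.72,
    "stars example (x86)": 1.95,
    "stars example (Pi)": 0.84,
}

# A value above 1.0 means pygame_cffi on PyPy was faster.
faster = sorted(name for name, ratio in relative_speed.items() if ratio > 1.0)
slower = sorted(name for name, ratio in relative_speed.items() if ratio < 1.0)

print("faster on PyPy:", faster)
print("slower on PyPy:", slower)
```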

OpenGL

Some not-so-great news is that PyOpenGL performs poorly on PyPy since PyOpenGL uses ctypes. This translates into a nasty reduction in frame rate for games that use OpenGL surfaces. It might be worthwhile creating a CFFI-powered version of PyOpenGL as well.

Where to now?

Work on pygame_cffi is ongoing. Here are some things that are in the pipeline:

- Get pygame_cffi on PyPy to a place where it is consistently faster than pygame on CPython.

- Implement the remaining modules and functions, starting with draw and transform.

- Improve test coverage.

- Reduce the time it takes for CFFI to parse the cdef. This makes the initial pygame import slow.
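One way to keep an eye on the import cost mentioned in the last item is to time the import in a fresh interpreter and subtract the interpreter's own startup time. The sketch below assumes Python 3 and uses `json` as a stand-in module; `run_time` and `import_cost` are hypothetical helpers, and single measurements like this are noisy.

```python
import subprocess
import sys
import time


def run_time(code):
    """Wall-clock seconds needed to run `code` in a fresh interpreter."""
    start = time.perf_counter()
    subprocess.run([sys.executable, "-c", code], check=True)
    return time.perf_counter() - start


def import_cost(module_name):
    """Approximate import time: fresh-interpreter import minus bare startup."""
    baseline = run_time("pass")
    return run_time("import " + module_name) - baseline


# `json` is only a stand-in; the module of interest here would be pygame.
print("approximate import cost: %.3f s" % import_cost("json"))
```

Because both measurements include process startup noise, the result can be slightly negative for cheap modules; averaging several runs gives a steadier number.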

If you want to contribute you can find pygame_cffi on GitHub. Feel free to find us on #pypy on freenode or post issues on GitHub.

Cheers,

Rizmari Versfeld

Pygame should be an excellent way to benchmark the performance of pypy, so this is great! I wanted to let you fellas know of another project that is using pypy that looks really neat as well... https://github.com/rfk/pypyjs

pygame seems outdated, because it is based on the first SDL version.

It will be interesting to see CFFI comparison for newer, SDL2 bindings, such as PySDL2, which is ctypes based at the moment.

https://pypi.python.org/pypi/PySDL2

Anatoly, pygame is outdated but has no clear replacement. PySDL2 is nice, but it's only a low level binding, it does not really help in the case of writing games.

Is it not wrapping the current SDL? I thought that it was... On github it says it's a pygame based wrapper(copies the api) for SDL, would that not make it the current SDL?

I looked into PyOpenGL's code to see if there is an easy way to upgrade to CFFI.

It's a bag of cats EVERYWHERE.

ctypes are defined all over the place, unlike most ctypes->cffi projects, where there is a single source file (api.py) that is easy to convert due to it being the raw interface to the C library.

@Maciej, pygame includes a lot of helpers and good documentation, but it is not perspective technology to play with. I'd say there are more interesting libs out there that gain more interesting results and speeding up dynamic binding for them would be very cool to make things like these - https://devart.withgoogle.com/ - possible.

@Anonymous, if I were to provide OpenGL bindings, I'd start with looking at https://github.com/p3/regal project and binding generator in scripts/

I've actually been working to see if I can get my own Pygame release, Sky Eraser, optimised enough to work on a Raspberry Pi -- it'd be worth seeing how implementing it under this configuration would work on top of the optimisations I've been working on in the background (boy are there a lot to make).

I might also be rewriting the APIs for Allegro 5.1 as an experiment though, to test under both CPython and PyPy.

I started to work on a newer and experimental OpenGL wrapper for Python, proudly blessed PyOpenGLng.

In comparison to PyOpenGL, it generates the requested OpenGL API from the OpenGL XML Registry and uses an automatic translator to map the C API to Python. The translator is quite lightweight in comparison to the PyOpenGL source code. And it is already able to run a couple of examples for OpenGL V3 and V4.

Currently the wrapper uses ctypes. But I am looking for tips to do the same for cffi, as well as feedback on performance and comments.

The project is hosted on https://github.com/FabriceSalvaire/PyOpenGLng.

@Fabrice, how is your newer and experimental OpenGL wrapper generator better than the existing ones? I am not saying that there is a NIH effect - probably some omission from the documentation.

I mean that if PyOpenGL doesn't use wrapper generator then there are a couple around not limiting themselves to Python. I am especially interested to know the comparison with regal.

It was my impression that OpenGL isn't hardware accelerated on the pi anyway... or am I incorrect?

@anatoly: The only real replacement for pygame which I know is pyglet. It is not quite as game-optimized as pygame, but very versatile and a joy to use.

https://pyglet.org

I've actually made a CFFI OpenGL binding, as part of my successor to my old PyGL3Display project. It's not hosted anywhere yet, but I'll see about getting up somewhere soon.

And... done. A mostly drop-in replacement for PyOpenGL on CFFI, or at least for OpenGL 3.2 core spec.

https://www.dropbox.com/s/rd44asge17xjbn2/gl32.zip

@Arne, pyglet rocks, because it is just `clone and run` unlike all other engines. But it looks a little outdated, that's why I started to look for alternatives.

@David, if you want people to comment on this, Bitbucket would be a better way to share sources than Dropbox.

@anatoly techtonick:

Actually, it'll end up on Launchpad in the near future (probably within 2 weeks?). However, it's the output of a wrapper generator and the wrapper generator is in pretty poor shape at the moment, in terms of packaging its output. I just figured people might be able to use it in the near future, even if it is in 'source-code-dump' form. If there's a better temporary home for it somewhere, I'm all ears.

@David, why reinvent the wheel? There are many wrapper generators around. Also, your project is not a replacement for PyOpenGL, because of GPL restrictions.

@anatoly

I never claimed my project is a replacement for PyOpenGL - it's not API compatible, for a start. Regarding license, it'll probably get changed for the bindings at some point, probably to 3-clause BSD.

On the wrapper generator: Really, the only actively maintained wrapper generator for Python that I'm aware of (which isn't project specific) is SWIG, which is not appropriate (at the very least, googling for 'python wrapper generator -swig' doesn't seem to give many results). In any case, the wrapper generator isn't a lot of code.

@anatoly: pyglet seems to be in maintenance mode right now. There are commits every few days, but only small stuff.

On the other hand I understand that: pyglet supplies everything a backend for a game-engine needs (I use it¹), so the next step should be to use it for many games and see whether shared needs arise.

¹: See https://1w6.org/deutsch/anhang/programme/hexbattle-mit-zombies and https://bitbucket.org/ArneBab/hexbattle/

@David, I am speaking about OpenGL specific wrapper generators. I've added information to this page - https://www.opengl.org/wiki/Related_toolkits_and_APIs#OpenGL_loading_libraries

The OpenGL generator in Python is included in regal project here https://github.com/p3/regal/scripts

pyglet also has one.

Sorry, the correct link is https://github.com/p3/regal/tree/master/scripts

@Arne, kissing elves trick is low. =) Otherwise looks wesnothy and 2D. I don't see why it should use OpenGL. 3D models would be cool.

I'd try to make it run on PySDL2 with "from sdl2.ext.api import pyglet". There is no pyglet API there, but would be interesting to see if it is possible to provide one.

@anatoly

Pyglet's GL wrapper generator creates a lot of chained functions (fairly slow in cPython). I'm also not sure if there's enough development activity in Pyglet to allow modifying core code, and given the size of the Pyglet project I'm not going to fork it. PyOpenGL has more or less the same issues.

Regal appears to be a very large project (a 68MB checkout), which has a scope much greater than just its wrapper generator - the sheer scope of the project does cause some barriers to entry. I'm still looking through, but I am fairly certain that it would take more effort to adapt Regals binding generator than I have expended on my own.

@anatoly: I like kissing elves ☺ (and when I get to write the next part of the story, I intend to keep them as player characters: That someone starts out in an intimate moment does not mean he or she is watchmeat).

@David: I guess modifying core-code in pyglet is not that big of a problem, especially *because* it is mostly being maintained right now: Little danger of breaking the in-progress work of someone else.

@anatoly: more specifically, I do not consider intimate moments as cheap (and WTactics has the image, so I could pull this off). Instead I try to rid myself of baseless inhibitions, though that’s not always easy: Killing off no longer needed societal conditioning is among the hardest battles…

@Arne: Maybe it'd be worth looking at integrating it then; however, it really is a completely different approach - gl32 is a source code writer, whereas Pyglet uses Pythons inbuilt metaprogramming capabilities - and so it would be completely rewriting a large chunk of Pyglets core. Once I've got the binding generator finalised, it might be worth seeing if it's possible to replace Pyglet's OpenGL bindings with these ones.

That said, in the interest of full disclosure: I'm not a fan of Pyglets per object draw method, again in the interests of speed. The per object draw method that Pyglet encourages with its API is not very scalable and eliminates a large number of the advantages of using OpenGL. So whilst I might see if gl32 can be plugged in for interesting benchmarks/proof-of-concept, I probably wouldn't try to get it bug-free and integrated into upstream Pyglet.

@Arne: Regarding Pyglet integration - it seems it would require a lot of work. There's two major issues - firstly, Pyglet only has raw OpenGL bindings, which are used everywhere and hence the "more pythonic" bindings of gl32 would be hard to integrate without editing every file using GL in Pyglet. Secondly, Pyglet uses GL functions which were removed in 3.2, and hence are not in gl32, so the API generator would have to be extended to handle any special cases on these functions.

@David: The per-object draw-method is very convenient for programming. As soon as you need more performance, most of the objects are grouped into batches, though. That way only the draw method of the batch is called and the batch can do all kinds of optimizations.

For Python 3.2 you might find useful stuff in the python-3 port of pyglet, though that hasn’t been released, yet, IIRC.

@Arne:

I'd argue that objects with Z-order would be more convenient programmatically, but frankly that's a matter of opinion. (Incidentally, this is something I'm working on as well, and I think I'm mostly done on it).

However, per-object-draw is only one concern I have on Pyglets speed credentials, as I do not believe Pyglet was written with speed as a design goal. For a different example, see pyglet.graphics.vertexbuffer; copying a ctypes object into a list in order to get slices to work is not a smart thing to do, performance wise!

I'm not sure where you got Python 3.2 from, but what I meant was that currently I'm restricting myself to OpenGL 3.2, which means that certain older OpenGL functions do not exist. Pyglet uses some of these removed functions (e.g. glPushClientAttrib), and hence the bindings I'm generating at the moment do not provide all the features Pyglet uses.

I'd like to remind readers of these comments that this thread has gone farther and farther from both the original post and the whole blog -- which is supposed to be related to PyPy. I'm rather sure that you're now discussing performance on CPython, which in this case is very different from performance on PyPy (or would be if it supported all packages involved). Maybe move this discussion somewhere more appropriate?

@Armin: You’re right… actually I would be pretty interested, though, whether pypy also has a performance issue with pyglet's chained functions.

@Arne: In principle, PyPy seems to handle Pyglet's chained functions relatively well (non-scientifically running the Astraea example's title screen sees CPU usage start very high, but eventually drop to about 80% of CPython's after the JIT warms up). There is one caveat preventing better testing: the moment keyboard input is given to Astraea on PyPy, PyPy segfaults.

@David: That is really important feedback for Armin and Anatoly, I think.

@David: Can you give some more background on the error (how to get the code, how to reproduce the segfault)?

@Arne: It's as simple as running the Astraea example in Pyglet and pressing a key (under PyPy 2.2, Pyglet 1.2-beta, Ubuntu 14.04). As far as I remember, this has been the case for some time (at least as far back as Ubuntu 12.10/PyPy 2.0 beta - although back then the major issue was PyPy using a lot more CPU; I didn't report this then due to a blog post at the time saying how cTypes would be rewritten). The error reported by Apport is "Cannot access memory at address 0x20"

Doing a cursory scan through other examples, the noisy and text_input examples also have problems. noisy segfaults when a spawned ball collides with a boundary (occasionally giving a partial rpython traceback); text_input appears to have a random chance of any of the input boxes being selectable.

Maybe it's time to file a proper bug report on this...

@Arne: I've now submitted a bug on the PyPy Bug tracker (Issue 1736), with more detail etc. Probably best to move conversation on any Pyglet related issues over there.

STMGC-C7 with PyPy

Hi all,

Here is one of the first full PyPy's (edit: it was r69967+, but the general list of versions is currently here) compiled with the new StmGC-c7 library. It has no JIT so far, but it runs some small single-threaded benchmarks by taking around 40% more time than a corresponding non-STM, no-JIT version of PyPy. It scales --- up to two threads only, which is the hard-coded maximum so far in the c7 code. But the scaling looks perfect in these small benchmarks without conflict: starting two threads each running a copy of the benchmark takes almost exactly the same amount of total time, simply using two cores.

Feel free to try it! It is not actually useful so far, because it is limited to two cores and CPython is something like 2.5x faster. One of the important next steps is to re-enable the JIT. Based on our current understanding of the "40%" figure, we can probably reduce it with enough efforts; but also, the JIT should be able to easily produce machine code that suffers a bit less than the interpreter from these effects. This seems to mean that we're looking at 20%-ish slow-downs for the future PyPy-STM-JIT.

Interesting times :-)

For reference, this is what you get by downloading the PyPy binary linked above: a Linux 64 binary (Ubuntu 12.04) that should behave mostly like a regular PyPy. (One main missing feature is that destructors are never called.) It uses two cores, but obviously only if the Python program you run is multithreaded. The only new built-in feature is `with __pypy__.thread.atomic:` which gives you a way to enforce that a block of code runs "atomically", which means without any operation from any other thread randomly interleaved.
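A minimal sketch of how the atomic block might be used from two threads follows. It assumes the attribute is reachable as `__pypy__.thread.atomic` as described above; on interpreters without it, the sketch falls back to an ordinary lock, which is only a stand-in so the example runs and does not have the same semantics as an STM atomic block.

```python
import threading

try:
    import __pypy__
    atomic = __pypy__.thread.atomic   # expected on PyPy-STM builds only
except (ImportError, AttributeError):
    atomic = threading.RLock()        # stand-in so the sketch runs elsewhere

counter = [0]


def work(n):
    for _ in range(n):
        # The read-modify-write below must not interleave with the other thread.
        with atomic:
            counter[0] += 1


threads = [threading.Thread(target=work, args=(10000,)) for _ in range(2)]
for t in threads:
    t.start()
for t in threads:
    t.join()

print(counter[0])  # 20000
```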

If you want to translate it yourself, you need a trunk version of clang with three patches applied. That's the number of bugs that we couldn't find workarounds for, not the total number of bugs we found by (ab)using the address_space feature...

Stay tuned for more!

Armin & Remi

The provided pypy-c crashes when calling fork(). Sadly fork() is indirectly called by a lot of things, including the subprocess module --- which can be executed just by importing random modules...

That sounds pretty huge!

Do you require clang for that? (why is it named on https://foss.heptapod.net/pypy/pypy/-/tree/branch//stmgc-c7/TODO )

Only clang has the address_space extension mentioned in the blog post; gcc does not.

I want to hear more talks on this. When is your next talk... pycon 2014? It would be hilarious if the pypy group were able to create naive concurrency in python, no one would have seen that coming! Many would have thought, "surely Haskell", or some other immutable, static language would get us there first. But no, it might just be that pypy allows any language that targets it to be concurrent, kiss style...amazing! Anyway, enough gushing, time for a random question. Mainstream vms like the JVM have added ways of speeding up dynamic languages, what advantages does pypy have over these traditional vms(other than the concurrency one that might come to fruition)? I think this would be a good question to answer at the next talk for pypy.

As it turns out there will be no PyPy talk at PyCon 2014.

The JVM runs Jython at a speed that is around that of CPython. PyPy runs substantially faster than this. One difference is that PyPy contains a small number of annotations targeted specifically towards RPython's JIT generator, whereas the JVM has no support for this.

Update containing the most obvious fixes: https://cobra.cs.uni-duesseldorf.de/~buildmaster/misc/pypy-c-r70103-70091-stm.tbz2 (Ubuntu 12.04 Linux 64-bit)

Oh, I do not want to know personally about the superiority of pypy vs the jvm. I was just suggesting a talking point; basically, show others that pypy is a better alternative (for dynamic languages, possibly all languages with naive concurrency working!) than llvm, jvm, etc... I do have a question though: would you suppose that the performance of pypy-stm would be better than that of something like the approach clojure has? I have heard that immutable data structures are nice for correctness but that they are bad for performance.

So PyPy-STM is Python without GIL? And it's possible to make it only 20% slower than "regular" PyPy? That would be quite an achievement.

Could you publish a build of PyPy-STM for Debian Stable?

The PyPy-STM we have so far doesn't include any JIT. If you want to try it out anyway on other Linux platforms than Ubuntu, you need to translate it yourself, or possibly hack around with symlinks and LD_LIBRARY_PATH.

> The PyPy-STM we have so far doesn't include any JIT

Yep, that's what blog post said :) But also PyPy-STM doesn't include GIL, does it?

Indeed, which is the point :-) You're welcome to try it out, but I'm just saying that I don't want to go to great lengths to provide precompiled binaries that work on Linux XYZ when I could basically release an updated version every couple of days... It's still experimental and in-progress. Early versions are limited to two cores; later versions to 4 cores. We still have to determine the optimal number for this limit; maybe around 8? (higher numbers imply a bit of extra overheads) It's an example of in-progress work. Another example is that so far you don't get feedback from cross-transaction conflicts; you used to in previous versions, but we didn't port it yet.

PyPy on uWSGI

There is an interview with Roberto De Ioris (of uWSGI fame) about embedding PyPy in uWSGI that covers the recent addition of a PyPy embedding interface using cffi and the experience with using it. Read the full interview.

Cheers,

fijal

"We showed that an existing VM code base can benefit of STM in terms of scaling up." I dispute this conclusion: in the benchmarks, it seems that the non-STM version is scaling up well, even better than the STM+OS-threads version. But how can the non-STM version scale at all? It shouldn't: that's a property of RPython. And why is the STM+OS-threads version faster even with just 1 thread? I think you need to answer these questions first. Right now it screams "you are running buggy benchmarks" to me.

I concur with Armin, the conclusions are problematic in the light of the current numbers.

Could you give some more details on the benchmarks? Can I find the Smalltalk code somewhere?

Things that come to mind are details about the scheduler. In the RoarVM, that was also one of the issues (which we did not solve). The standard Squeak scheduling data structure remains unchanged I suppose? How does that interact with the STM, is it problematic that each STM thread updates this shared data structure during every scheduling operation?

Also, more basic, are you making sure that the benchmark processes are running with highest priority (80, IIRC), to avoid interference with other processes in the image?

On the language level, something that could also have an impact on the results is closures. How are they implemented? I suppose similar to the way the CogVM implements them? I suppose, you make sure that closures are not shared between processes?

And finally, what kind of benchmark harness are you using? Did you have a look at SMark? (https://smalltalkhub.com/#!/~StefanMarr/SMark)

We used that one for the RoarVM, and it provides various options to do different kinds of benchmarks, including weak-scaling benchmarks, which I would find more appropriate for scalability tests. Weak scaling means you increase the problem size with the number of cores. That replicates the scenario where the problem itself is not really parallelizable, but you can solve more problems at the same time in parallel. It also makes sure that each process/thread does the identical operations (if set up correctly).
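The weak-scaling idea can be sketched in a few lines of Python (Python 3 assumed). This is not SMark and not the Smalltalk benchmark code; `job`, `run_copies` and `weak_scaling_efficiency` are hypothetical names. Each worker runs an identical copy of the job, so the total problem size grows with the number of workers, and the efficiency is the single-worker time divided by the time for N workers.

```python
import threading
import time


def job(n=200000):
    """One fixed-size unit of work; every worker runs an identical copy."""
    total = 0
    for i in range(n):
        total += i * i
    return total


def run_copies(workers):
    """Wall-clock time for `workers` threads, each running one copy of job()."""
    threads = [threading.Thread(target=job) for _ in range(workers)]
    start = time.perf_counter()
    for t in threads:
        t.start()
    for t in threads:
        t.join()
    return time.perf_counter() - start


def weak_scaling_efficiency(max_workers):
    """Efficiency T(1) / T(N): close to 1.0 means the extra work ran in parallel."""
    t1 = run_copies(1)
    return {n: t1 / run_copies(n) for n in range(1, max_workers + 1)}


for n, eff in weak_scaling_efficiency(2).items():
    print("%d worker(s): efficiency %.2f" % (n, eff))
```

On an interpreter with a global lock the efficiency is expected to fall roughly as 1/N, while a VM that scales would stay near 1.0.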

Well, all those questions aside, interesting work :) Hope to read more soon ;)

You definitely hit a really weak spot in our report... Today we investigated the ParallelSum benchmark again. So far, we've found out that it was indeed partially a problem with the priority of the benchmark process. The preliminary benchmark results make more sense now and as soon as we have stable ones we will update them.

I'll still try to address some of your questions right now. :)

1. Benchmark code

I've just wrapped up the current version of our benchmarks and put them in our repository. You can find the two Squeak4.5 images at the stmgc-c7 branch of the RSqueak repository. You can find the benchmarks in the CPB package. The Squeak4.5stm image needs the RSqueak/STM VM.

2. Scheduler data structures

Yes, the scheduling data structure is completely unchanged. We have only added a new subclass of Process which overwrites fork and calls a different primitive. However, these Processes are not managed by the Smalltalk scheduler, so there should be no synchronization issues here.

3. Interference of other processes:

This is probably the source of the "speed-up" we observe on the normal RSqueakVM. With more threads we might get a bigger portion of the total runtime. So far, the benchmarks already ran in a VM mode which disables the Smalltalk GUI thread, however in the traces we found that the event handler is still scheduled every now and then. We've done it as you suggested, Stefan, and set the priority to 80 (or 79 to not mess up the timer interrupt handler).

4. Benchmark harness

We actually use SMark and also made sure the timing operations of RSqueak do their job correctly. However we are probably not using SMark at its full potential.

I've just updated the benchmarks. All benchmark processes are now running with the Smalltalk process priority of 79 (80 is the highest). The single-threaded VMs now show the expected behavior.

To further clarify on the Mandelbrot benchmarks: After a discussion with Stefan, I have changed the Mandelbrot implementation. Each job now only has private data and does not read or write in any shared data structure. Still the benchmark results remain the same and we can still observe a high proportion of inevitable transactions.
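The "private data only" structure described above can be illustrated with a small Python sketch (Python 3 assumed). It is not the Smalltalk benchmark; the function names and the image dimensions are made up. Each job computes one row using only its own local variables and returns the result, so no job reads or writes a shared data structure.

```python
from concurrent.futures import ThreadPoolExecutor
from functools import partial


def mandelbrot_row(y, width=40, height=20, max_iter=50):
    """Compute iteration counts for one row using only local (private) data."""
    row = []
    ci = (y / height) * 2.0 - 1.0
    for x in range(width):
        cr = (x / width) * 3.0 - 2.0
        zr = zi = 0.0
        n = 0
        while n < max_iter and zr * zr + zi * zi <= 4.0:
            zr, zi = zr * zr - zi * zi + cr, 2.0 * zr * zi + ci
            n += 1
        row.append(n)
    return row


def render(height=20, workers=4):
    """Run one job per row; each job returns its own row, nothing is shared."""
    row_job = partial(mandelbrot_row, height=height)
    with ThreadPoolExecutor(max_workers=workers) as pool:
        return list(pool.map(row_job, range(height)))


image = render()
print(len(image), "rows of", len(image[0]), "pixels")
```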

As Armin pointed out, and which would be a next step, we would need to figure out which parts of the interpreter might cause systematic conflicts.