Nightly graphs of PyPy's performance

Hello.

In the past few months, we made tremendous progress on the JIT front. To monitor the progress daily, we recently introduced some cool graphs that plot revision vs performance. They are based on the Unladen Swallow benchmark runner and they're written entirely in JavaScript, using canvas via the jQuery and Flot libraries. It's amazing what you can do in JavaScript these days... They are also tested via the very good oejskit plugin, which integrates py.test with JavaScript testing, driven from the command line.

As you can probably see, we're very good on some benchmarks and not that great on others. Some of the bad results come from the fact that while we did a lot of JIT-related work, other PyPy parts did not see that much love. Some of our algorithms on the builtin data types are inferior to those of CPython. This is going to be an ongoing focus for a while.

We want to first improve on the benchmarks for a couple of weeks before doing a release to gather further feedback.

Cheers, fijal

Accelerating PyPy development by funding

PyPy has recently made some great speed and memory progress towards providing the most efficient Python interpreter out there. We also just announced our plans for the pypy-1.2 release. Much of this is driven by personal commitment, by individuals and companies investing time and money. Now we'd appreciate some feedback and help regarding getting money into the PyPy project to help its core members (between 5 and 15 people depending on how you count) to sustain themselves. We see several options:

- use a foundation structure and ask for tax-exempt donations to the project, its developers and infrastructure. We just got a letter from the Software Freedom Conservancy saying that they view our application favourably, so this option will hopefully become practical soon.

- offer to implement certain features like a 64bit JIT-backend, Numpy for PyPy or a streamlined installation in exchange for money, contributed in small portions/donations. Do you imagine you or your company would sponsor PyPy on a small scale for efforts like this? Any other bits you'd like to see?

- offer to implement larger scale tasks by contracting PyPy related companies, namely Open End and merlinux who have successfully done such contracts in the past. Please don't hesitate to contact holger@merlinux.eu and bea@openend.se if you want to start a conversation on this.

- apply for public/state funding - in fact we are likely to get some funding through Eurostars, more on that separately. Such funding usually covers only 50-60% of actual employment and project costs, though, and is tied to research questions rather than to making PyPy a production-usable interpreter.

Anything else we should look out for?

cheers & thanks for any feedback, Maciej and Holger

What's the status of possible mobile applications for PyPy? That seems nearer in terms of potential products and thus 'commercial' funding.

Have you guys looked into jitting regular expressions?

I am not quite sure how hard it would be but having very fast regexps would be a great selling point for Pypy.

What about activating the Python Users Groups around the world? I think the case has to be made for PyPy still to the regular folk, if you will. So - what if you produced a video showing off its potential, or maybe a series of videos, much like the "Summer of NHibernate" series. All the while, on the same site as the videos, you have a "tips" jar for donations. The videos would serve as a great marketing campaign and would invite the development community into the fold, earning the buy-in you seek. This kind of attention in the community would only serve the project well when attracting the larger fish to the pond.

Just my thoughts. :)

@kitblake good point. The main blocker for making PyPy useful on mobile phones is support for GUI apps. Alexander's recent PyPy QT experiments are teasing in this direction. To fully exploit PyPy's super-efficient memory usage we probably need to provide native bindings. That and maybe a GIL-less interpreter would make PyPy a superior choice for mobile devices.

However, GUI-bindings/free threading are orthogonal to the ongoing JIT-efforts. Somehow PyPy suffers a bit from its big potential (there also is stackless and sandboxing etc.). Question is: (How) can we make donation/other funding guide PyPy developments and at the same time support dedicated developers?

What if new PySide code generator targets RPython?

https://www.pyside.org/

Niki, generally that's a viable approach. Pyside is moving to shiboken, a new framework for generating bindings. Somebody would have to check how large the effort is to port it to RPython.

Currently, Pyside is using Boost::Python AFAIK.

could you accept donations via a paypal button or something like that? It's simple and easy but I think it's unlikely to be sufficient.

I'm always amazed at the MoveOn organization... it seems like every week they send out mail like 'hey! we need $400,000 to stop The Man! Can you send us $20?' followed by 'Thanks! We've achieved our goal!'

I don't know how many people or how much each one donates but they always meet their goal!

anonymous: yes, establishing some way to accept money via paypal is high on our list. if nothing else we can use some private trusted account. moveon is rather geared towards general politics, i guess, so not directly applicable. But i remember there was some open source market place which allows to bid for certain features ...

Have you considered moving to any sort of DVCS (Hg, Git, etc)? Or, given your current management style, does a centralized VCS or a DVCS add more to the project?

Googling "open source bounties", finds Stack Overflow suggesting self-hosting bounties for the best results, which I suppose, makes sense. The people interested in taking bounties would be the ones already at your site. Being one of a million bounty providers on a site wouldn't generate much traffic.

Thinking out loud, moving to a DVCS might actually help the bounty process, assuming you'd want to move in that direction.

Planning a next release of PyPy

The PyPy core team is planning to make a new release before the next Pycon US.

The main target of the 1.2 release is packaging the good results we have achieved applying our current JIT compiler generator to our Python interpreter. Some of that progress has been chronicled in recent posts on the status blog. By releasing them in a relatively stable prototype we want to encourage people to try them with their own code and to gather feedback in this way. By construction the JIT compiler should support all Python features, what may vary are the speedups achieved (in some cases the JIT may produce worse results than the PyPy interpreter which we would like to know) and the extra memory required by it.

For the 1.2 release we will focus on the JIT stability first, less on improving non-strictly JIT areas. The JIT should be good at many things as shown by previous blog postings. We want the JIT compiler in the release to work well on Intel 32 bits on Linux, with Mac OS X and Windows being secondary targets. Which compilation targets work will depend a bit on contributions.

In order to finalize the release we intend to have a concentrated effort ("virtual sprint") from the 22nd to the 29th of January. Coordination will happen as usual through the #pypy irc channel on freenode. Samuele Pedroni will take the role of release manager as he already did in the past.

Good News!

Can't wait to try pypy as my standard python vm on my desktop machine.

Btw: Are there any plans yet for python generators support in the jit?

Because thats the only feature that I'm currently missing when using pypy.

I have some medium sized apps, that I'd like to try, but they often use generators, so these will be slower with jit than without, won't they?

@Anonymous.

Generators won't be sped up by JIT. This does not mean that JIT can't run or can't speed up other parts of your program. And yes, there are plans of supporting that.

Cheers,

fijal

I want to get involved in the development of PyPy, but I'm just a student with some experience with compilers. Is there any list of junior contributions that can be done by somebody starting out?

Thanks!

Leysin Winter Sprint: postponed

Update: the sprint has been postponed to some later date. The next PyPy sprint will probably still be in Leysin, Switzerland, for the seventh time.

It would be nice if there are prebuilt binaries in the next release.

Certainly if it's faster there are a lot of graphics based projects where this would be interesting (pygame, pygelt, cocos2d, shoebot etc).

@Anonymous:

Probably they would be still slower, because ctypes is very slow in PyPy afaik.

Someone mentioned in irc that the long-term goal for ctypes is that the jit doesn't use libffi at all but does direct assembler-to-c calls instead, if I remember correctly, which should be superfast.

That would of course be absolutely awesome. :)

(and it's also the secret reason, why I only use pypy compatible modules for my pyglet game ;)

Unfortunately I don't know if this is going to happen anytime "soon" / before the 1.2 release (at least I can't find it on extradoc/planning/jit.txt) but I know many people who would instantly drop cpython then. :P

Heck, if I only had more clue about, how difficult this is to implement...

Using CPython extension modules with PyPy, or: PyQt on PyPy

If you have ever wanted to use CPython extension modules on PyPy, we want to announce that there is a solution that should be compatible with quite a few of the available modules. It is neither new nor written by us, but nevertheless works great with PyPy.

The trick is to use RPyC, a transparent, symmetric remote procedure call library written in Python. The idea is to start a CPython process that hosts the PyQt libraries and connect to it via TCP to send RPC commands to it.

I tried to run PyQt applications using it on PyPy and could get quite a bit of the functionality of these working. Remaining problems include regular segfaults of CPython because of PyQt-induced memory corruption and bugs because classes like StandardButtons behave incorrectly when it comes to arithmetical operations.

Changes to RPyC needed to be done to support remote unbound __init__ methods, shallow call by value for list and dict types (PyQt4 methods want real lists and dicts as parameters), and callbacks to methods (all remote method objects are wrapped into small lambda functions to ease the call for PyQt4).

If you want to try RPyC to run the PyQt application of your choice, you just need to follow these steps. Please report your experience here in the blog comments or on our mailing list.

- Download RPyC from the RPyC download page.

- Download this patch and apply it to RPyC by running patch -p1 < rpyc-3.0.7-pyqt4-compat.patch in the RPyC directory.

- Install RPyC by running python setup.py install as root.

- Run the file rpyc/servers/classic_server.py using CPython.

- Execute your PyQt application on PyPy.

PyPy will automatically connect to CPython and use its PyQt libraries.

Note that this scheme works with nearly every extension library. Look at pypy/lib/sip.py on how to add new libraries (you need to create such a file for every proxied extension module).
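To make the idea more concrete, here is a minimal sketch of what talking to the CPython process looks like from the PyPy side. It is not the code in pypy/lib/sip.py; the helper names `connect_to_cpython`, `remote_module` and `main` are made up for this illustration, and it assumes the classic RPyC server from the steps above is running on the same machine on RPyC's default classic port (18812).

```python
def connect_to_cpython(host="localhost", port=18812):
    """Open a classic RPyC connection to the CPython server process.

    Assumption: classic_server.py is already running on host:port.
    """
    import rpyc  # third-party; imported lazily so this file loads without it
    return rpyc.classic.connect(host, port)


def remote_module(conn, name):
    """Return a proxy for a module that lives in the CPython process."""
    return conn.modules[name]


def main():
    conn = connect_to_cpython()
    # This attribute lookup is answered by CPython, not by PyPy.
    print(remote_module(conn, "sys").version)
    # A proxy for the real PyQt4 module; attribute access and calls on it
    # are forwarded over TCP to the CPython process.
    QtGui = remote_module(conn, "PyQt4.QtGui")
    return QtGui
```

Calling `main()` on PyPy while the server is running should print the version string of the CPython interpreter, which is an easy way to check that the proxying works before trying a full PyQt application.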

Have fun with PyQt

Alexander Schremmer

OT: you should separate labels by commas, so that Blogspot recognizes them as distinct labels.

These sound interesting. Could you please elaborate? A link would suffice, if these are already documented by non-pypy people. Thanks!

cool stuff, alexander! Generic access to all CPython-provided extensions could remove an important blocker for PyPy usage and allow incremental migrations.

Besides, I wonder if having two processes, one for application and one for bindings can have benefits to stability.

Dear anonymous,

the StandardButtons bug was already communicated to a Nokia employee.

If you are interested in the segfaults, contact me and I will give you the source code that I used for testing.

This is an important step forward!

There are probably two reasons why people use extensions: bindings to libraries and performance.

Unfortunately this specific approach does not address performance. Is there anything on the horizon that would allow a near-CPython API for extensions, so modules would just need to be recompiled against PyPy bindings for the CPython API? Probably not 100% compatible, but close?

Any chances of that happening?

Andraz Tori, Zemanta

In theory, this is possible, but a lot of work. Nobody has stepped up to implement it, yet.

Isn't the exposure of refcounts in the CPython C API going to be a bit of a problem for implementing the API on pypy? perhaps a "fake" refcount could be associated with an object when it is first passed to an extension? This could still be problematic if the extension code expects to usefully manipulate the refcount, or to learn anything by examining it...

Indeed, it would be part of the task to introduce support in the GCs for such refcounted objects. Note that real refcounting is necessary because the object could be stored in a C array, invisible to the GC.

I'm trying to think of ways around that, but any API change to make objects held only in extensions trackable by the GC would probably be much worse than adding refcounted objects, wouldn't it, unless the extension were written in rpython...

Some benchmarking

Hello.

Recently, thanks to the surprisingly helpful Unhelpful, also known as Andrew Mahone, we have a decent, if slightly arbitrary, set of performance graphs. It contains a couple of benchmarks already seen on this blog as well as some taken from The Great Computer Language Benchmarks Game. These benchmarks don't even try to represent "real applications" as they're mostly small algorithmic benchmarks. Interpreters used:

- PyPy trunk, revision 69331 with --translation-backendopt-storesink, which is now on by default

- Unladen swallow trunk, r900

- CPython 2.6.2 release

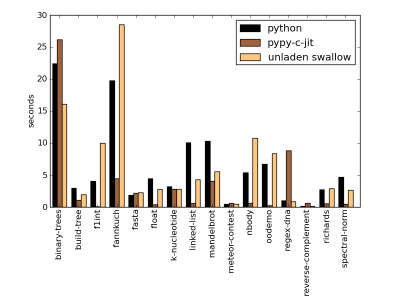

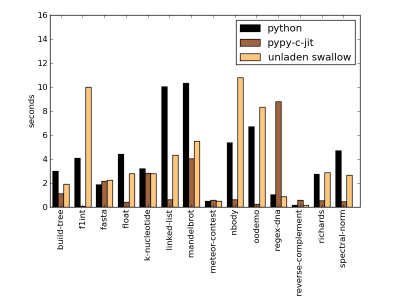

Here are the graphs; the benchmarks and the runner script are available

And zoomed in for all benchmarks except binary-trees and fannkuch.


As we can see, PyPy is generally somewhere between the same speed as CPython and 50x faster (f1int). The places where we're the same speed as CPython are places where we know we have problems - for example generators are not sped up by the JIT and they require some work (although not as much by far as generators & Psyco :-). The glaring inefficiency is in the regex-dna benchmark. This one clearly demonstrates that our regular expression engine is really, really bad and urgently requires attention.

The cool thing here is that although these benchmarks might not represent typical Python applications, they're not uninteresting. They show that algorithmic code does not need to be far slower in Python than in C, so using PyPy one need not worry about algorithmic code being dramatically slow. As many readers would agree, that kills yet another usage of C in our lives :-)

Cheers, fijal

Wow! This is getting really interesting. Congratulations!

By the way, it would be great if you include psyco in future graphs, so speed junkies can have a clearer picture of pypy's progress.

Very interesting, congratulations on all the recent progress! It would be very interesting to see how PyPy stacks up against Unladen Swallow on Unladen Swallow's own performance benchmark tests, which do include a bit more real-world scenarios.

@Eric: yes, definitely, we're approaching that set of benchmarks

@Luis: yes, definitely, will try to update tomorrow, sorry.

It's good, but...

We are still in the realms of micro-benchmarks. It would be good to compare their performances when working on something larger. Django or Zope maybe?

These last months, you seem to have had almost exponential progress. I guess all those years of research are finally paying off. Congratulations!

Also, another graph for memory pressure would be nice to have. Unladen Swallow is (was?) not very good in that area, and I wonder how PyPy compares.

[nitpick warning]

As a general rule, when mentioning trunk revisions, it's nice to also mention a date so that people know the test was fair. People assume it's from the day you did the tests, and confirming that would be nice.

[/nitpick warning]

What about memory consumption? That is almost as important to me as speed.

Congratulations !

Could you please remind us how to build and test pypy-jit?

I'm curious why mandelbrot is much less accelerated than, say, nbody. Does PyPy not JIT complex numbers properly yet?

@Anon Our array module is in pure Python and much less optimized than CPython's.

How long until I can do

pypy-c-jit translate.py -Ojit targetpypystandalone.py

?

So far, when I try, I get

NameError: global name 'W_NoneObject' is not defined

https://paste.pocoo.org/show/151829/

AFAIU it's not PyPy's regex engine being "bad" but rather the fact that the JIT generator cannot consider and optimize the loop in the regex engine, as it is a nested loop (the outer one being the bytecode interpretation one).

@holger: yes, that explains why regexps are not faster in PyPy, but not why they are 5x or 10x slower. Of course our regexp engine is terribly bad. We should have at least a performance similar to CPython.

Benjamin, is it really an issue with array? The inner loop just does complex arithmetic. --Anon

@Anon, @Benjamin

I've just noticed that W_ComplexObject in objspace/std/complexobject.py is not marked as _immutable_=True (as e.g. W_IntObject is), so it is entirely possible that the JIT is not able to optimize math with complexes as it does with ints and floats. We should look into it; it is probably easy to find out.

guys, sorry, who cares about *seconds*??

why didn't you normalize to the test winners? :)

So, um, has anyone managed to get JIT-ed pypy to compile itself?

When I tried to do this today, I got this:

https://paste.pocoo.org/show/151829/

@Leo:

yes, we know that bug. Armin is fixing it right now on faster-raise branch.

antonio: good point. On the second thought, though, it's not a *really* good point because we don't have _immutable_=True on floats either...

@Maciej Great! It'll be awesome to have a (hopefully much faster??) JITted build ... it currently takes my computer more than an hour ...

It would perhaps also be nice to compare the performance with one of the current JavaScript engines (V8, SquirrelFish etc.)

Nice comparisons - and micro-performance looking good. Congratulations.

HOWEVER - there is no value in having three columns for each benchmark. The overall time is arbitrary, all that matters is relative so you might as well normalise all graphs to CPython = 1.0, for example. The relevant information is then easier to see!

Tom is right, normalizing the graphs to cpython = 1.0 would make them much more readable.

Anyway, this is a very good Job from Unhelpful.

Thanks!

glad to see someone did something with my language shootout benchmark comment ;)

I checked https://www.looking-glass.us/~chshrcat/python-benchmarks/results.txt but it doesn't have the data for unladen swallow. Where are the numbers?

Düsseldorf Sprint Report

While the Düsseldorf sprint is winding down, we put our minds to the task of retelling our accomplishments. The sprint was mostly about improving the JIT and we managed to stick to that task (as much as we managed to stick to anything). The sprint was mostly filled with doing many small things.

Inlining

Carl Friedrich and Samuele started the sprint trying to tame the JIT's inlining. Until now, the JIT would try to inline everything in a loop (except other loops) which is what most tracing JITs actually do. This works great if the resulting trace is of reasonable length, but if not it would result in excessive memory consumption and code cache problems in the CPU. So far we just had a limit on the trace size, and we would abort tracing when the limit was reached. This would happen again and again for the same loop, which is not useful at all. The new approach introduced is to be more clever when tracing is aborted by marking the function with the largest contribution to the trace size as non-inlinable. The next time this loop is traced, it usually then gives a reasonably sized trace.

This creates a problem, because now some functions that don't contain loops are not inlined, which means that no assembler code is ever generated for them. To remedy this problem we also make it possible to trace functions from their start (as opposed to just tracing loops). We do that only for functions that cannot be inlined (either because they contain loops or because they were marked as non-inlinable as described above).
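The abort-and-retry heuristic is easy to picture with a toy model. The sketch below is not the JIT's code: `trace_loop`, `TRACE_LIMIT` and the representation of a loop as a list of (function name, operation count) pairs are all invented for this illustration. It only shows the idea of blaming the biggest contributor when a trace gets too long, and treating that function as a plain call the next time around.

```python
from collections import Counter

TRACE_LIMIT = 10  # hypothetical limit, tiny on purpose


def trace_loop(calls, non_inlinable, limit=TRACE_LIMIT):
    """Simulate tracing one loop.

    calls: (function name, number of operations) pairs, in execution order.
    non_inlinable: set of function names that must not be inlined; it is
    updated in place when tracing is aborted.
    Returns (success, trace length reached).
    """
    contributions = Counter()
    length = 0
    for func, ops in calls:
        if func in non_inlinable:
            length += 1  # a residual call costs a single operation
            continue
        contributions[func] += ops
        length += ops
        if length > limit:
            # Abort: blame the function that contributed the most operations.
            culprit, _ = contributions.most_common(1)[0]
            non_inlinable.add(culprit)
            return False, length
    return True, length
```

For example, with `loop = [("helper", 3), ("big", 20), ("helper", 3)]` and an empty set, the first call aborts and marks "big" as non-inlinable, and the second call over the same loop succeeds with a short trace.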

The result of this is that the Python version of the telco decimal benchmark runs to completion without having to arbitrarily increase the trace length limit. It's also about 40% faster than running it on CPython. This is one of the first non-tiny programs that we speed up.

Reducing GC Pressure

Armin and Anto used some GC instrumentation to find places in pypy-c-jit that allocate a lot of memory. This is an endlessly surprising exercise, as usually we don't care too much about allocations of short-lived objects when writing RPython, as our GCs usually deal well with those. They found a few places where they could remove allocations, most importantly by making one of the classes that make up traces smaller.

Optimizing Chains of Guards

Carl Friedrich and Samuele started a simple optimization on the trace level that removes superfluous guards. A common pattern in a trace is to have stronger and stronger guards about the same object. As an example, often there is first a guard that an object is not None, later followed by a guard that it is exactly of a given class and then even later that it is a precise instance of that class. This is inefficient, as we can just check the most precise thing in the place of the first guard, saving us guards (which take memory, as they need resume data). Maciek, Armin and Anto later improved on that by introducing a new guard that checks for non-nullity and a specific class in one guard, which allows us to collapse more chains.
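As a rough illustration of the first version of this optimization, here is a toy pass over a trace represented as a list of tuples. The operation names and the `STRENGTH` ordering are assumptions made for this sketch, and the pass deliberately ignores everything a real optimizer has to worry about (for instance whether the guarded variable could change between the guards).

```python
# Illustrative ordering: a later entry implies all the earlier ones.
STRENGTH = {"guard_nonnull": 0, "guard_class": 1, "guard_value": 2}


def collapse_guard_chains(trace):
    """Keep one guard per variable: the strongest, placed where the first was.

    trace: list of tuples (operation name, variable, ...extra arguments).
    """
    # First pass: find the strongest guard seen for each variable.
    strongest = {}
    for op in trace:
        name, var = op[0], op[1]
        if name in STRENGTH:
            best = strongest.get(var)
            if best is None or STRENGTH[name] > STRENGTH[best[0]]:
                strongest[var] = op
    # Second pass: emit that guard at the first guard's position, drop the rest.
    result = []
    emitted = set()
    for op in trace:
        name, var = op[0], op[1]
        if name in STRENGTH:
            if var not in emitted:
                result.append(strongest[var])
                emitted.add(var)
            continue
        result.append(op)
    return result
```

Given a trace with a `guard_nonnull` on `p1`, then a field read, then a `guard_class` on `p1`, the pass returns a trace that starts with the `guard_class` and contains no other guard on `p1`, so only one guard needs resume data.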

Improving JIT and Exceptions

Armin and Maciek went on a multi-day quest to make the JIT and Python-level exceptions like each other more. So far, raising and catching exceptions would make the JIT generate code that has a certain amusement value, but is not really fast in any way. To improve the situation, they had to dig into the exception support in the Python interpreter, where they found various inefficiencies. They also had to rewrite the exceptions module to be in RPython (as opposed to just pure Python + an old hack). Another problem is that tracebacks give you access to interpreter frames. This forces the JIT to deoptimize things, as the JIT keeps some of the frame's content in CPU registers or on the CPU stack, which reflective access to frames prevents. Currently we try to improve the simple cases where the traceback is never actually accessed. This work is not completely finished, but some cases are already significantly faster.

Moving PyPy to use py.test 1.1

Holger worked on porting PyPy to use the newly released py.test 1.1. PyPy still uses some very old support code in its testing infrastructure, which makes this task a bit annoying. He also gave the other PyPy developers a demo of some of the newer py.test features and we discussed which of them we want to start using to make our tests shorter and clearer. One of the things we want to do eventually is to have fewer skipped tests than now.

Using a Simple Effect Analysis for the JIT

One of the optimizations the JIT does is caching fields that are read out of structures on the heap. This cache needs to be invalidated at some points, for example when such a field is written to (as we don't track aliasing much). Another case is a call in the assembler, as the target function could arbitrarily change the heap. This of course is imprecise, since most functions don't actually change the whole heap, and we have an analysis that finds out which types of structs and arrays a function can mutate. During the sprint Carl Friedrich and Samuele integrated this analysis with the JIT, to help it invalidate caches less aggressively. Later Anto and Carl Friedrich also ported this support to the CLI version of the JIT.
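The difference between the two invalidation strategies can be shown with a small model. The `FieldCache` class below is invented for this illustration and works on plain Python values rather than on traces; the set passed to `call` stands in for the result of the effect analysis, with `None` meaning "nothing is known about the callee".

```python
class FieldCache(object):
    """Toy model of a cache for fields read from heap structures."""

    def __init__(self):
        self._cache = {}  # (object, field name) -> cached value

    def read(self, obj, field, load):
        """Return the cached value, calling load(obj, field) only on a miss."""
        key = (obj, field)
        if key not in self._cache:
            self._cache[key] = load(obj, field)
        return self._cache[key]

    def write(self, obj, field, value):
        """Record a write. Without alias tracking, any cached entry for the
        same field name might refer to the same object, so drop them all."""
        for key in [k for k in self._cache if k[1] == field]:
            del self._cache[key]
        self._cache[(obj, field)] = value

    def call(self, written_fields=None):
        """Record a call to another function.

        written_fields: field names the callee may write, or None if unknown.
        """
        if written_fields is None:
            self._cache.clear()  # no information: forget everything
        else:
            for key in [k for k in self._cache if k[1] in written_fields]:
                del self._cache[key]
```

With the analysis result available, a call that can only write field `y` leaves a cached read of field `x` intact, whereas a call with unknown effects still flushes the whole cache.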

Miscellaneous

Samuele (with some assistance from Carl Friedrich) set up a buildbot slave on a Mac Mini at the University. This should let us stabilize on Mac OS X. So far we still have a number of failing tests, but now we are in a situation to sanely approach fixing them.

Anto improved the CLI backend to support the infrastructure for producing the profiling graphs Armin introduced.

The guinea-pigs that were put into Carl Friedrich's care have been fed (which was the most important sprint task anyway).

Samuele & Carl Friedrich

Great news and a nice read. Out of curiosity, did you also improve performance for the richards or pystone benchmarks?

this is a very fascinating project and i enjoy reading the blog even if i am not really a computer scientist and don't have a very deep understanding of many details. :)

something i always wonder about... wouldn't it be possible to use genetic algorithms in compiler technology? like a python to machine code compiler that evolves to the fastest solution by itself? or is there still not enough computing power for something like that?

Düsseldorf Sprint Started

The Düsseldorf sprint starts today. Only Samuele and I are there so far, but that should change over the course of the day. We will mostly work on the JIT during this sprint, trying to make it a lot more practical. For that we need to decrease its memory requirements some more and to make it use less aggressive inlining. We will post more as the sprint progresses.

PyPy on RuPy 2009

Hello.

It's maybe a bit late to announce, but there will be a PyPy talk at the RuPy conference this weekend in Poznan. Specifically, I'll be talking mostly about PyPy's JIT and how to use it. Unfortunately the talk is on Saturday, at 8:30 in the morning.

EDIT: Talk is online, together with examples

Cheers, fijal

I, and many interested with me, appreciate links to slides, videos or transcripts of the talk once it has been held. PyPy is exciting! Good luck in Poznan.

All materials for pypy talks are available in talk directory.

Cheers,

fijal

Logging and nice graphs

Hi all,

This week I worked on improving the system we use for logging. Well, it was not really a "system" but rather a pile of hacks to measure in custom ways timings and counts and display them. So now, we have a system :-)

The system in question was integrated in the code for the GC and the JIT, which are two independent components as far as the source is concerned. However, we can now display a unified view. Here is for example pypy-c-jit running pystone for (only) 5000 iterations:

The top long bar represents time. The bottom shows two summaries of the total time taken by the various components, and also plays the role of a legend to understand the colors at the top. Shades of red are the GC, shades of green are the JIT.

Here is another picture, this time on pypy-c-jit running 10 iterations of richards:

We have to look more closely at various examples, but a few things immediately show up. One thing is that the GC is put under large pressure by the jit-tracing, jit-optimize and (to a lesser extent) the jit-backend components. So large in fact that the GC takes at least 60-70% of the time there. We will have to do something about it at some point. The other thing is that on richards (and it's likely generally the case), the jit-blackhole component takes a lot of time. "Blackholing" is the operation of recovering from a guard failure in the generated assembler, and falling back to the interpreter. So this is also something we will need to improve.
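To see how a figure like "the GC takes 60-70% of the time there" can come out of nested log sections, here is a small sketch. It does not parse the real log format; it assumes the log has already been turned into a list of (timestamp, "begin" or "end", section name) events, and the section name `gc-minor` in the example is only illustrative. Time is attributed to the innermost open section, so GC work triggered during tracing counts for the GC and not for jit-tracing.

```python
from collections import defaultdict


def exclusive_times(events):
    """Sum the time spent in each section, excluding nested sections.

    events: (timestamp, "begin" or "end", section name) tuples, sorted by
    timestamp and properly nested.
    """
    totals = defaultdict(int)
    stack = []        # currently open sections, innermost last
    last_time = None  # timestamp of the previous event
    for time, kind, name in events:
        if stack:
            # The interval since the previous event belongs to the
            # innermost section that was open during it.
            totals[stack[-1]] += time - last_time
        if kind == "begin":
            stack.append(name)
        else:
            assert stack and stack[-1] == name, "unbalanced sections"
            stack.pop()
        last_time = time
    return dict(totals)
```

For instance, a jit-tracing section lasting from 0 to 100 that contains a gc-minor section from 10 to 70 is split into 40 units for jit-tracing itself and 60 units for the GC.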

That's it! The images were generated with the following commands:

PYPYLOG=/tmp/log pypy-c-jit richards.py

python pypy/tool/logparser.py draw-time /tmp/log --mainwidth=8000 --output=filename.png

EDIT: nowadays the command-line has changed to:

python rpython/tool/logparser.py draw-time /tmp/log --mainwidth=8000 filename.png

Nice work.

I think you'll cause a revolution when this project delivers its goals, opening python (and other dynamic languages) to a much wider range of uses.

ooh, pretty graphs :) It's been very good to follow pypy progress through the blog.

Can the gc/jit be made to take up a maximum amount of time, or be an incremental process? This is important for things requiring real time - like games, audio, multimedia, robots, ninjas etc.

A note, that some other languages do gc/jit in other threads. But I imagine, pypy is concentrating on single threaded performance at the moment.

I'm sure you're aware of both those things already, but I'm interested to see what the pypy approach to them is?

cu,

So... what's a revision number that I can use? Am I just supposed to guess? The page should have a reasonable default revision number.

for anyone else looking, 70700 is a reasonable place to start. (The graphs are really nice by the way, I'm not hating!)

a couple of suggestions:

1. scale for X axis (dates are likely to be more interesting than revision numbers)

1a. scale for Y axis

2. Add another line: unladen swallow performance

+1 for Anonymous's suggestions 1 and 2.

This is cool.

Unladen Swallow's perf should also be considered if possible.

Hey.

Regarding revisions - by default it points to the first one we have graphs from, so you can just slice :) Also, yeah, revision numbers and dates should show up, will fix that. We don't build nightly unladen swallow and we don't want to run it against some older version, because they're improving constantly.

Cheers,

fijal

Wonderful idea, great implementation (axis are needed, tooltips would be interesting for long series), impressive results.

I hope you guys exploit this to raise interest in PyPy in this pre-release period. Just take a look at the response you get to posts involving numbers, benchmarks, etc. (BTW, keep linking to the funding post) :)

A series of short posts discussing hot topics would be a sure way to keep Pypy around the news until the release, so you get as much feedback as possible.

Suggestions:

- Possible factors in slower results (discuss points in the Some Benchmarking post);

- One-off comparisons to different CPython versions, Unladen Swallow, ShedSkin, [C|J|IronP]ython (revisit old benchmarks posts?);

- Mention oprofile and the need for better profiling tools in blog, so you can crowdsource a review of options;

- Ping the Phoronix Test Suite folks to include Pypy translation (or even these benchmarks) in their tests: Python is an important part of Linux distros;

- Don't be afraid to post press-quotable numbers and pics, blurbs about what Pypy is and how much it's been improving, etc. Mention unrelated features of the interpreter (sandboxable!), the framework (free JIT for other languages), whatever;

- The benchmark platform (code, hardware, plans for new features).

Regarding comparison with unladen swallow: I think having a point per month would be good enough for comparison purposes.

@Anonymous: Great suggestions! I'll look at these issues. In fact, things like profiling have been high on our todo list, but we should advertise it more. We surely miss someone who'd be good at PR :-)

Something's wrong with plot one's scale: the speed ups are represented by a first line of 2x, a second one of 4x and the third one is 8x. Shouldn't it be 6x instead?